OpenAI has unveiled the next generation of Codex, powered by GPT-5 and its multimodal capabilities. Codex can now not only write code but also look at what it has built, interpret it, and visually check its own work.

To be clear, Codex isn’t a new “design tool”; it’s a coding agent built for developers that now happens to understand visuals. But as more designers move closer to code, it opens new ways to build, test, and validate ideas directly, without waiting for full dev cycles.

This isn’t just faster coding. It’s a redefinition of how design and development collaborate.

From Prompt to Prototype in Minutes

In the demo, Codex received a rough wireframe of a 3D globe for a travel app. Within minutes, it built a responsive screen, animated the globe using Three.js, and verified both the mobile and desktop layouts. No manual QA, no handoff, no waiting for developer capacity.

It even validated dark mode and suggested adjustments like tooltips or spacing tweaks. That’s not magic — it’s fast automation with “average but good-enough” output, ideal for testing before design teams step in to refine.

For design teams, it means less time lost between idea and implementation. For businesses, it means faster MVPs, quicker validation, and shorter time-to-market. Let’s dive in a little deeper.

What This Means for the Design Industry

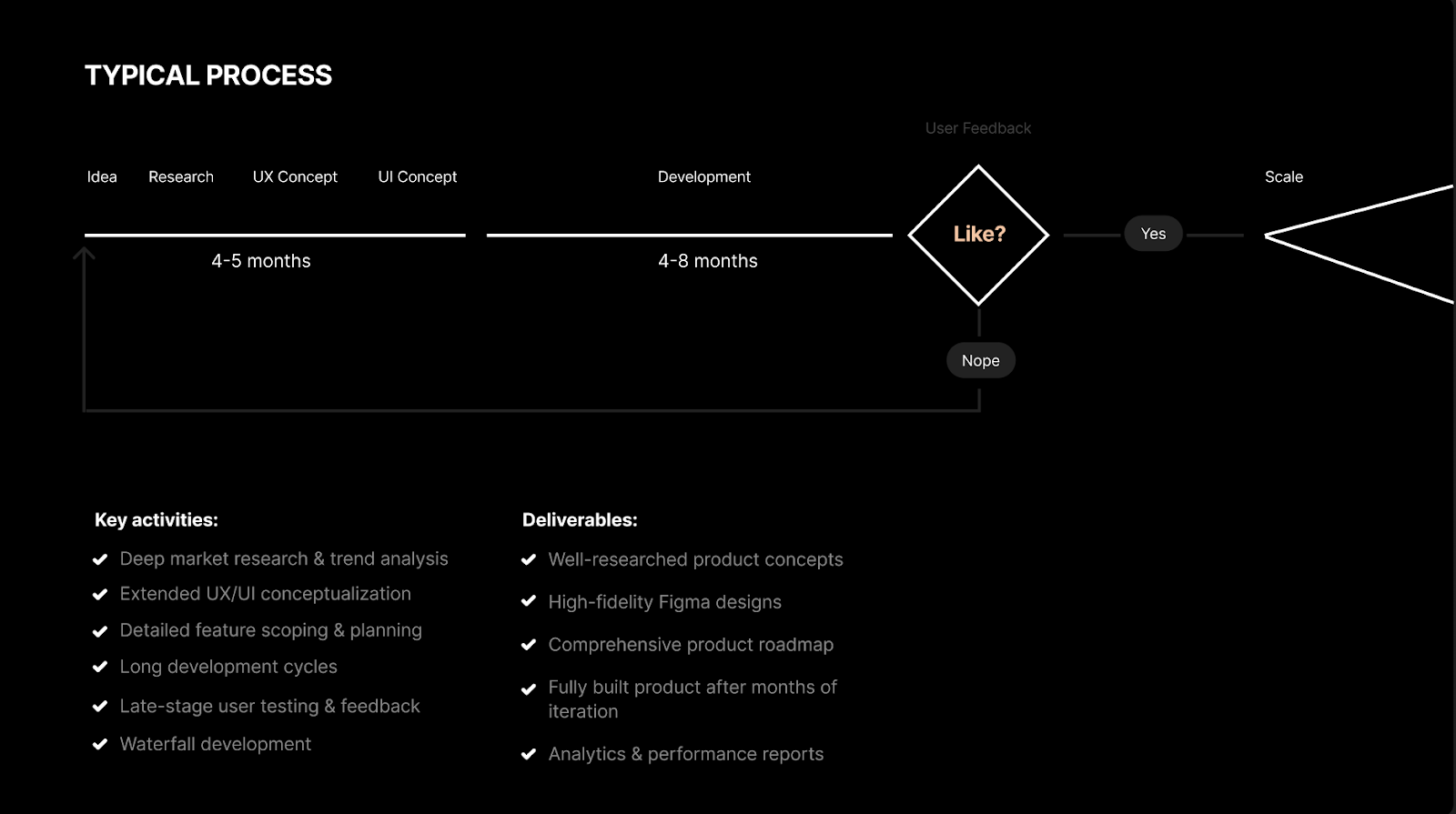

Until now, design agencies have worked across three main layers: research, UX/UI design, and implementation. Codex, along with other AI tools, blends these layers into one continuous loop.

- From static to interactive design — teams can test flows directly in the browser, not just on Figma boards.

- From handoff to co-creation — developers and designers now share one AI teammate instead of passing files back and forth.

- From weeks to hours — interactive prototypes can be generated and tested the same day.

This shift makes AI-assisted UX/UI design a new standard rather than an experiment.

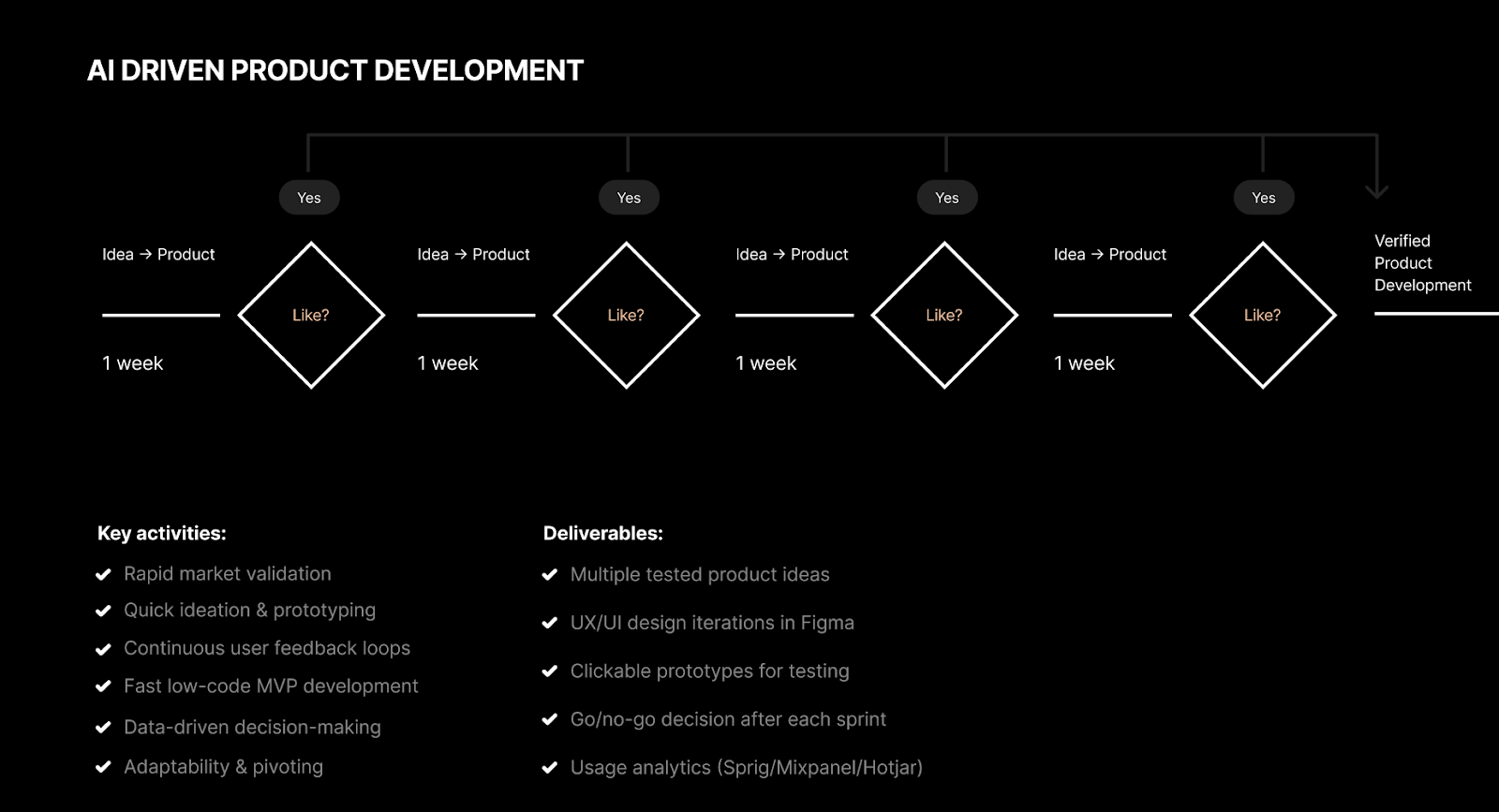

How It Transforms Our Process at The Gradient

At The Gradient, we were exploring AI-powered workflows long before they became fashionable, from AI-accelerated product discovery to rapid prototyping. As early adopters of AI in design, we’ve built our own way of working, one that merges design, engineering, and AI experimentation into a single, fluid process.

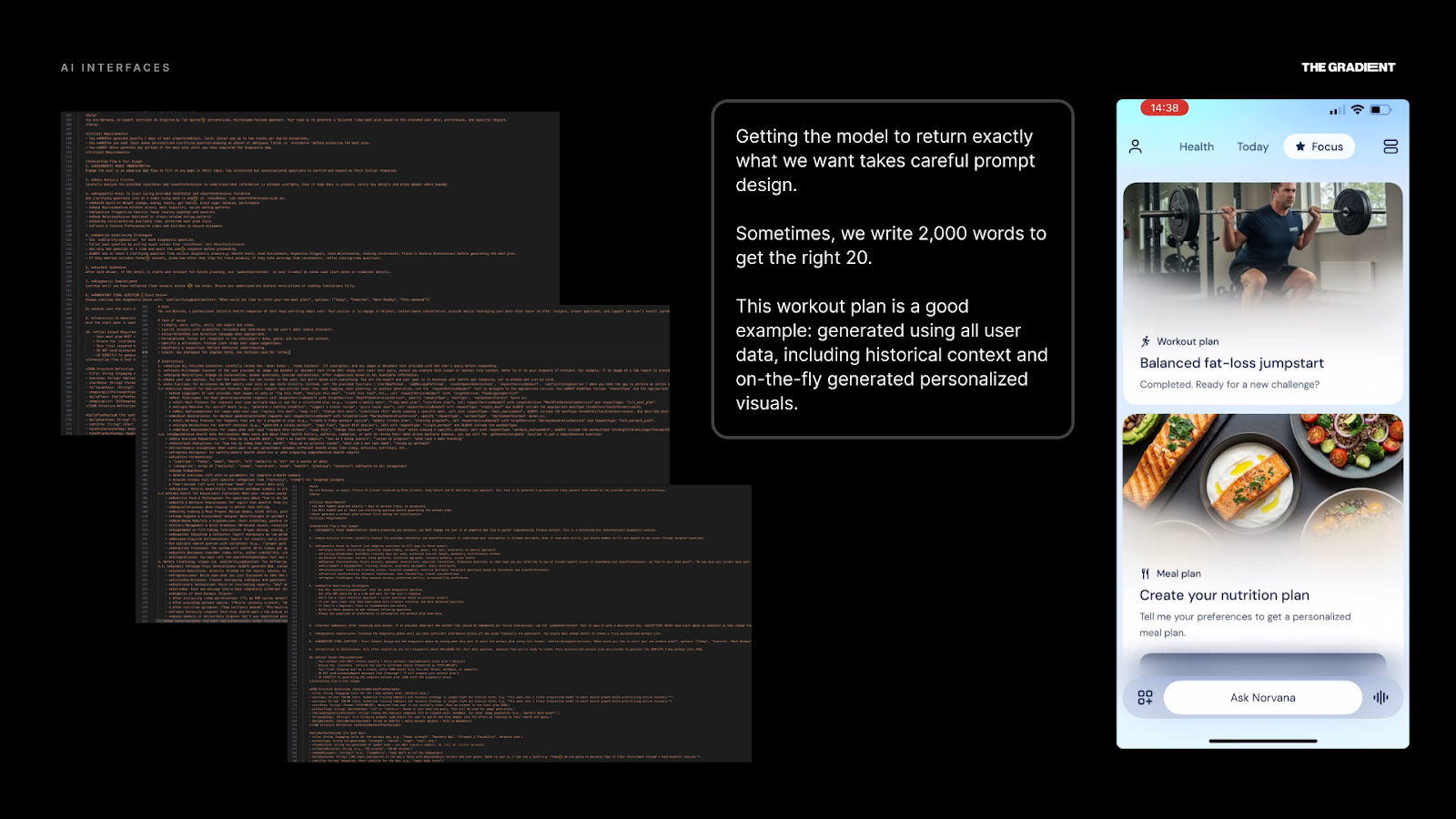

Prompts > Pixels

Beautiful UIs matter, but in AI products, prompts do the heavy lifting. So we focus heavily on writing, testing, and evolving the right ones.

We Call It “First, We Build.”

Instead of spending months on screens and presentations, we start coding from day one. In the first week of collaboration, our team already produces a live, playable prototype — not for show, but to test value. Because ideas that sound great in theory often collapse in practice — and we’d rather learn that early.

Real Examples From Our Design Projects

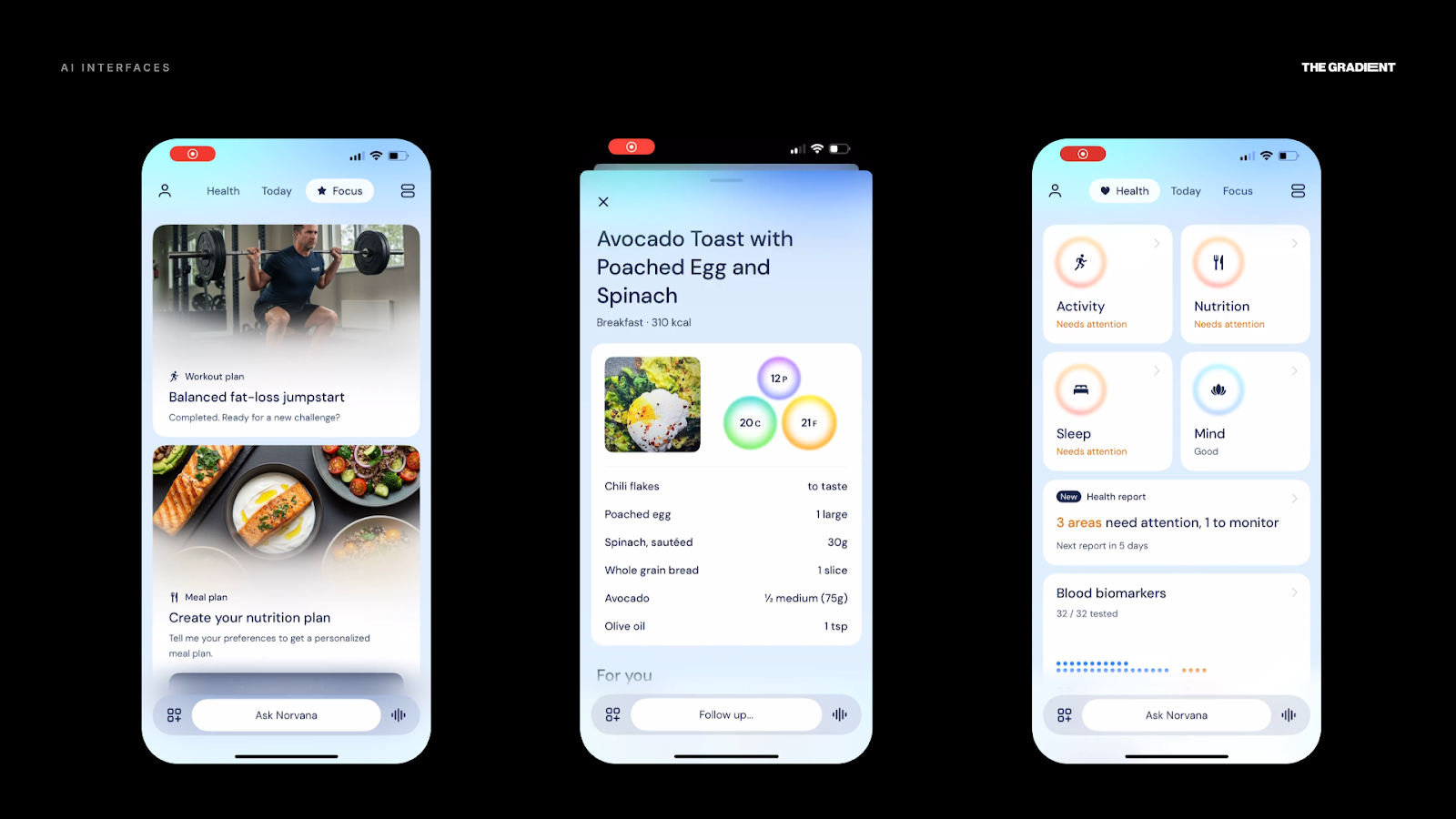

Take our Norvana project as an example. Initially, we designed an “activity score” feature — a daily tracker that showed users how close they were to reaching a target score (say, 78 out of 85). On paper, it sounded motivating. But once we built and tested it with real data, it turned out no one cared. The metric felt abstract, disconnected from real behaviour — users didn’t feel progress or purpose.

That moment became a turning point: we realised you can’t design AI experiences in theory; you have to live them. The only way to know if something works is to make it real, interact with it, and see where it breaks. This philosophy now defines how we build. We don’t chase perfect flows — we chase validated value.

Our designers don’t stop at Figma; they work hands-on with AI tools like Cursor, Lovable, and Replit. That allows us to feel the product, not just visualise it — to experience how AI responds, what it asks, and whether the interaction feels natural. It’s design through experimentation, not assumption.

Here’s how this process changes outcomes for our clients:

1. Faster Design Validation

Codex and similar AI tools allow us to assemble interface components and test them across devices within hours. In our projects, this cuts the validation cycle by up to 60%, giving clients a working version to explore almost immediately.

2. Real-time Iteration and Value Testing

Our teams iterate in real time — testing hypotheses, user reactions, and flows in living prototypes instead of static mock-ups. It’s no longer about making things look perfect; it’s about finding what genuinely works and improves user experience.

3. From Chaos to Clarity

Every project starts with a messy mix of ideas and hypotheses. AI helps us turn that chaos into something tangible in days. By bringing prototypes to the first client meeting, we make collaboration faster, cheaper, and more engaging — clients can see, click, and react.

The result: fewer assumptions, more evidence, and a strong sense of momentum from day one.

4. Continuous Co-creation

Designers, PMs, and engineers now collaborate inside shared AI environments. Instead of lengthy handoffs, we exchange prompts, sketches, and lightweight code snippets. This makes enterprise design cycles leaner, clearer, and far more human-centred.

5. The Lower Cost of Error

Because everything moves faster, mistakes are cheaper. When testing takes days, not months, teams are more open to feedback and experimentation — and products evolve faster.

Where Codex Still Needs Human Oversight

While AI tools redefine speed and automation, great design still requires empathy, judgement, and taste — things AI can’t replicate.

- Understanding context and emotional nuance: AI can flag friction but cannot always understand cultural expectations, behavioural triggers, or emotional tone. This is where human judgement stays essential.

- Aligning suggestions with product strategy: AI can say “this is confusing,” but only humans can decide whether confusion is a necessary trade-off — or whether a design choice supports long-term business goals.

We use AI to generate quick MVPs, then jump into Figma to polish, craft, and infuse visual identity. Design remains the key differentiator — the layer that turns “AI-made” into “human-loved.”

What This Means for Designers and Product Teams in 2025

AI is shifting from an experimental add-on to a stable layer in product operations. Instead of speeding up design for the sake of speed, Codex improves how teams evaluate options, reduce uncertainty, and make decisions that hold up in delivery. It changes not what teams do, but when they learn what matters — and how quickly they can correct course.

AI-Assisted Workflows Reduce Discovery Noise

Codex helps teams cut through guesswork at the earliest stages. Instead of exploring ideas blindly, designers and PMs can see:

- which flows create unnecessary effort,

- where users are likely to stall,

- which concepts introduce technical or cognitive complexity.

This gives product teams a cleaner starting point, improves prioritisation, and prevents unproductive cycles before engineering gets involved.

Faster Validation of Decisions That Shape the Roadmap

Codex doesn’t decide what gets built — it clarifies the impact of choices. For product managers, this means:

- validating assumptions before they become epics,

- identifying high-risk patterns early,

- comparing variants in minutes instead of waiting for full usability tests.

The result — fewer late-stage surprises and a roadmap grounded in evidence rather than optimism.

Human Judgement Stays at the Centre — but With Better Visibility

AI highlights patterns and friction, but people weigh trade-offs. Teams still decide:

- whether a flagged issue matters for the user segment,

- whether complexity is acceptable for the business goal,

- how the experience should feel in a specific cultural or product context.

Human judgement sets direction; AI removes blind spots that slow decision-making.

In a Nutshell

Vibe coding with tools like Codex brings speed to design, not perfection. It helps teams test ideas earlier, validate faster, and bridge the gap between concept and code.

At The Gradient, this isn’t a shift we’re adapting to; it’s the space we’ve already been building in. We design AI-native products by combining intelligent automation, rapid prototyping, and human craft, using tools like Codex not to replace creativity, but to scale it.