Over the next two years, AI-powered smart glasses will move from experimental prototypes to practical, everyday tools. For enterprises in healthcare, retail/e-commerce, and fintech, this is not just another hardware cycle. It is a shift in behaviour: users will no longer enter interfaces to find answers; answers will appear in the environment where decisions take place. IDC and Statista project 35–45% annual enterprise growth in AR/smart glasses from 2025 to 2029, with healthcare, retail, and financial services leading adoption.

At The Gradient, we help organisations prepare for this transition by designing experiences that feel natural, contextual, and aligned with real human decision-making. This article outlines what AI glasses will enable, why enterprise teams should pay attention now, and how to approach the shift strategically.

A New User Experience: From “Search and Act” to “See and Act”

Digital products have long relied on a predictable sequence: a user feels uncertain, opens an app, navigates, searches, interprets, and finally decides. Every journey assumes friction — switching context, remembering steps, finding the right feature. AI glasses break this pattern entirely.

Instead of the interaction beginning with an app, it begins with human attention. The system interprets what the user is looking at and provides support in real time. Guidance becomes immediate and embedded in the environment, not something the user must request.

This shift matters because users respond fastest when information arrives at the moment of intent. They feel more confident, less cognitively overloaded, and more grounded in the decision they are making. For enterprises, that confidence becomes the foundation of adoption, engagement, and long-term value — long before the hardware reaches mass scale.

Healthcare: Strengthening Decisions Through Real-Time Clarity

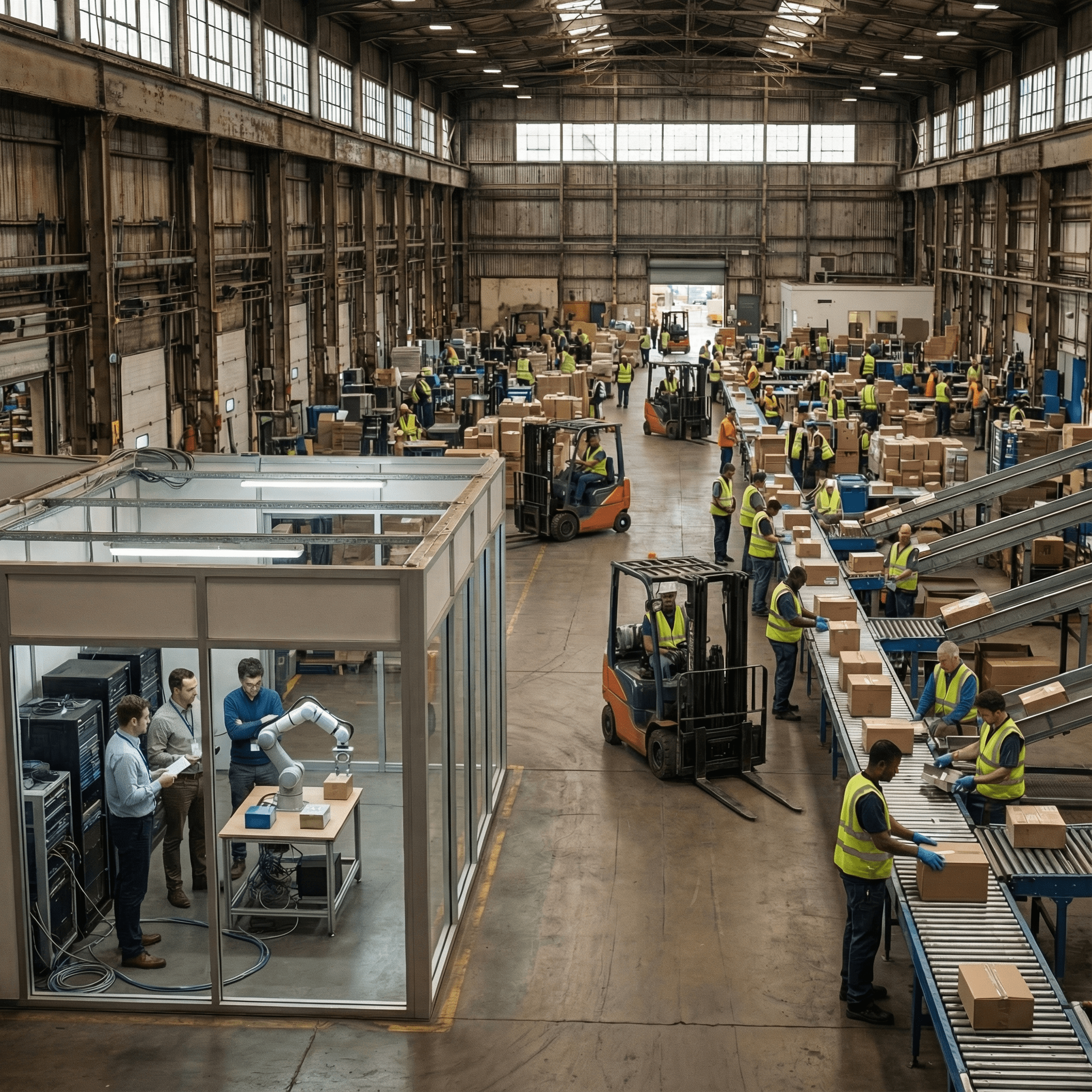

Healthcare is shaped by moments where timing, precision, and cognitive load directly influence outcomes. AI glasses enhance these moments not by adding complexity but by reducing uncertainty in the flow of work.

Clinicians and patients both operate in environments where spatial cues, context, and timing matter. AI glasses bring clarity to this space without asking anyone to break focus.

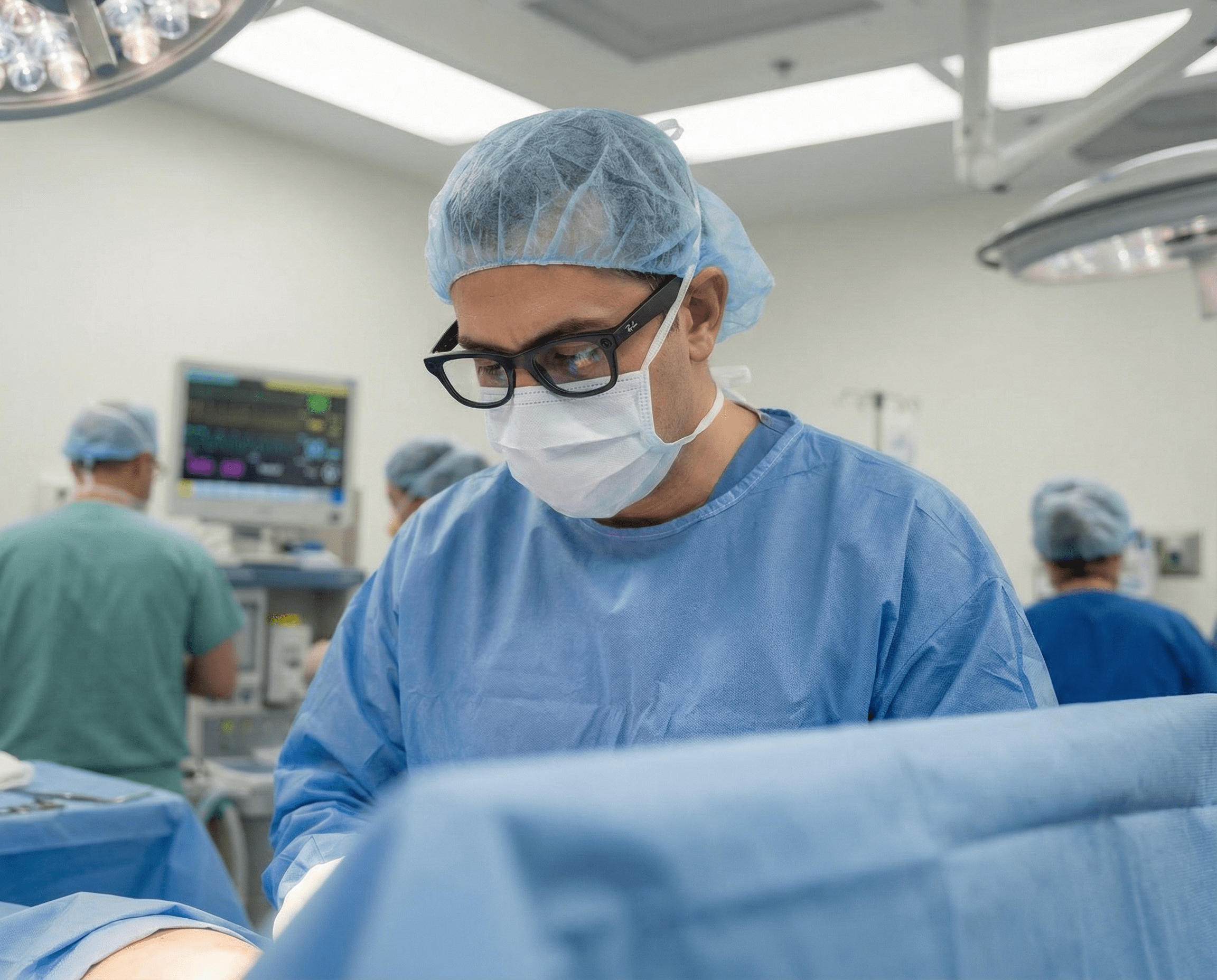

Clinical decisions become sharper at the point of care

When examining a wound, preparing a dose, or performing a procedure, clinicians often shift repeatedly between the patient and external systems. AI glasses remove that divide. Protocol reminders, visual highlights, and early warnings can appear directly in view without breaking attention. Deloitte’s research shows that when clinical decision-support systems surface guidance in real time, diagnostic errors decrease by 30–40% — a reflection of how much small, well-timed cues matter.

Patients follow care plans more naturally

Recovery depends on hundreds of small moments: how a patient positions themselves, breathes, moves, or takes medication. When instructions appear exactly when needed, rather than in an app the patient must remember to open, adherence becomes more intuitive.

EY reports that such real-time adherence tools can reduce hospital readmissions by around 15%, demonstrating how behaviour improves when users feel guided rather than overwhelmed.

Training becomes contextual rather than theoretical

Clinical knowledge is often spatial: the angle of a hand, the order of steps, the physical cues to notice. With AI glasses, trainees learn these skills in real environments, supported by light prompts that build confidence and consistency early on.

Retail and E-commerce: Helping Customers Choose with Confidence

Retail decisions hinge on micro-moments: hesitation about fit, uncertainty about value, or a question about availability. AI glasses reduce this hesitation by placing clarity directly in the shopper’s line of sight, turning exploration into a more confident and informed journey.

Shoppers understand products instantly, without breaking flow

When a customer picks up an item, they can see availability, alternatives, materials or sustainability information immediately — no phones, no searching. This bridges the gap between e-commerce convenience and in-store tactility. McKinsey links this type of real-time personalisation to 20–30% higher conversion, driven by reduced hesitation and clearer decision pathways.

Discovery becomes intuitive rather than effortful

As shoppers move through a store, the system responds to what draws their attention — suggesting complementary items, highlighting features, or helping narrow down options. Recommendations become ambient rather than interruptive, making exploration smoother and more enjoyable.

Staff interactions become more confident and human

Store associates often carry the pressure of remembering extensive product and inventory details. With AI glasses, they stay fully engaged with customers while receiving discreet, contextual information that improves service quality.

Accenture reports that in-store environments supported by real-time AI can boost customer loyalty by up to 40%, driven by more consistent and confident interactions with staff.

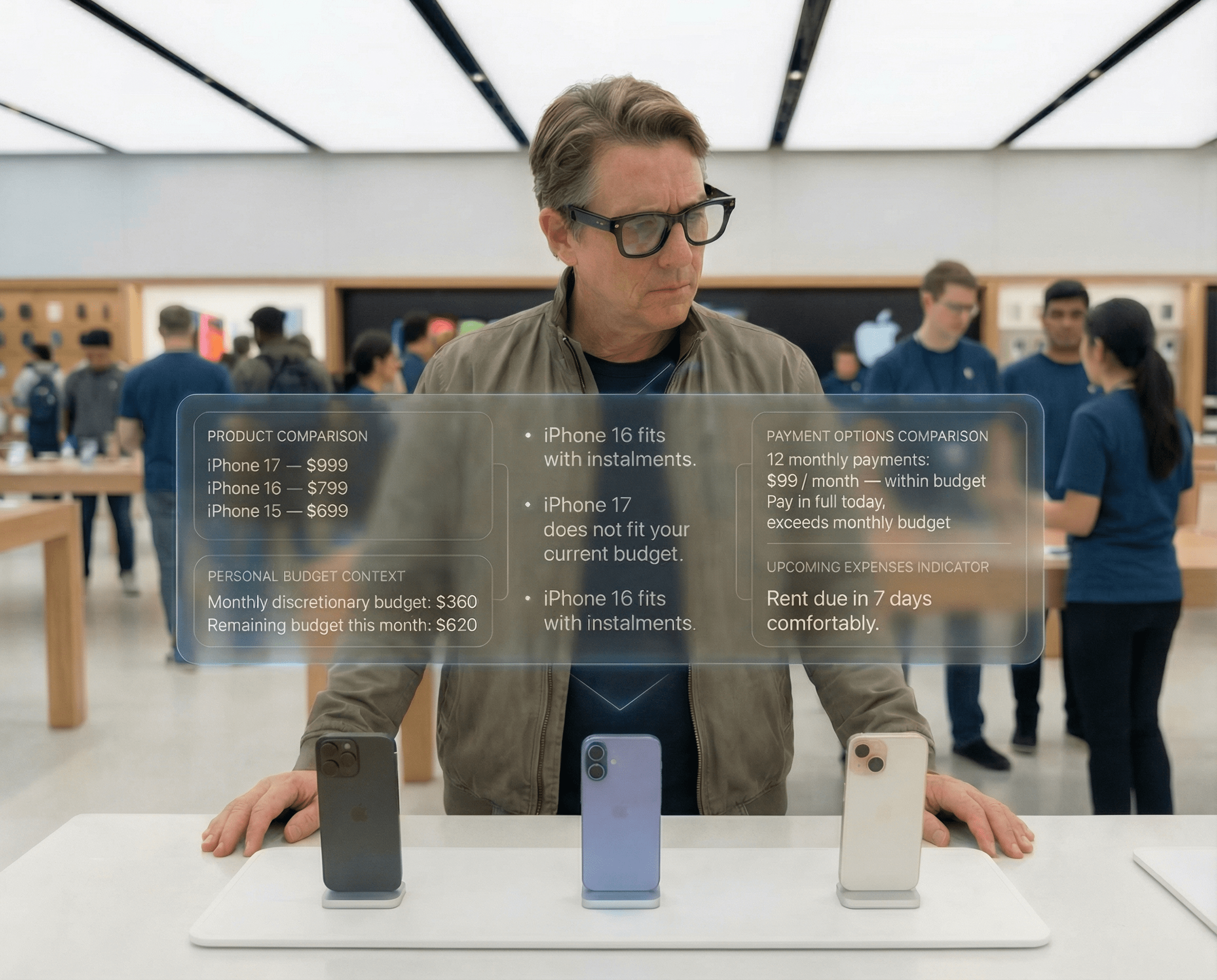

Fintech: Financial Awareness Where Decisions Actually Happen

Most financial decisions don’t occur within finance apps. They happen in real-life contexts — shops, restaurants, meetings, travel, and spontaneous purchases. AI glasses bring financial clarity directly into those moments.

Users make smarter decisions at the exact moment of purchase

A person considering a product might see whether it fits their budget, whether a different payment method is smarter, or how it aligns with upcoming expenses. This shift from retrospective analysis to real-time guidance changes engagement dramatically.

Bain & Company notes that such contextual decision-support can increase product engagement by up to 50%.
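To make the point-of-purchase check concrete, here is a minimal sketch of the kind of logic that could sit behind a "does this fit my budget?" prompt. Everything in it is an assumption made for illustration: the `BudgetSnapshot` fields, the `purchase_hint` function, and the wording of the hints are hypothetical and do not describe any specific product or API.

```python
from dataclasses import dataclass


@dataclass
class BudgetSnapshot:
    """Hypothetical view of one budget category for the current month."""
    monthly_limit: float      # planned spend for the category
    spent_so_far: float       # amount already spent this month
    upcoming_expenses: float  # known bills still due this month


def purchase_hint(price: float, budget: BudgetSnapshot) -> str:
    """Return a short hint that could be shown in the wearer's view."""
    # Money still available once committed spending is set aside.
    remaining = budget.monthly_limit - budget.spent_so_far - budget.upcoming_expenses
    if price <= remaining:
        return f"Fits budget: {remaining - price:.2f} left after purchase"
    return f"Over budget by {price - remaining:.2f}"


snapshot = BudgetSnapshot(monthly_limit=400.0, spent_so_far=250.0, upcoming_expenses=60.0)
print(purchase_hint(75.0, snapshot))   # Fits budget: 15.00 left after purchase
print(purchase_hint(120.0, snapshot))  # Over budget by 30.00
```

The hint accounts for upcoming expenses before the purchase happens, which is the difference between real-time guidance and a retrospective spending report.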

Expense tracking becomes frictionless and accurate

Instead of photographing or manually entering receipts, the glasses automatically recognise merchants, amounts, and categories. Users get accurate records without effort — and financial tools gain richer, more reliable inputs.

Authentication becomes secure without interrupting the journey

High-value flows often rely on multi-step verification that breaks the user’s focus. Multimodal authentication (voice, gaze, context) can provide the same level of security while feeling more natural.

BCG reports that financial experiences that feel seamless and contextual can double user trust scores — a rare outcome in a category where trust is fragile.
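One way to picture multimodal authentication is as a weighted combination of confidence signals, with a stricter bar for larger transactions. The sketch below is purely illustrative: the `Signals` fields, the weights, the amount tiers, and the `authenticate` function are assumptions made for this example, not a description of any real authentication standard or vendor SDK.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    """Hypothetical confidence scores in [0, 1], one per modality."""
    voice: float
    gaze: float
    context: float  # e.g. a familiar location or a known device


# Illustrative weights only; a real system would tune and validate these.
WEIGHTS = {"voice": 0.5, "gaze": 0.3, "context": 0.2}


def required_score(amount: float) -> float:
    """Demand more confidence as the transaction amount grows (assumed tiers)."""
    if amount < 50:
        return 0.6
    if amount < 500:
        return 0.75
    return 0.9


def authenticate(signals: Signals, amount: float) -> str:
    """Approve silently when confident enough, otherwise ask for an extra step."""
    score = (WEIGHTS["voice"] * signals.voice
             + WEIGHTS["gaze"] * signals.gaze
             + WEIGHTS["context"] * signals.context)
    return "approve" if score >= required_score(amount) else "step_up"


signals = Signals(voice=0.9, gaze=0.8, context=0.7)
print(authenticate(signals, 30.0))    # approve
print(authenticate(signals, 2000.0))  # step_up
```

The design choice worth noting is the fallback: low-risk payments pass without interruption, while high-value ones still trigger an explicit verification step when the combined signal is not strong enough.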

Why Enterprises Should Prepare Now

Because the value is behavioural — not hardware-dependent — enterprise use cases will accelerate first. Teams that prepare early will gain:

- a clearer understanding of how users behave when information becomes spatial

- prototypes that test real-world interaction patterns before the market forms

- the ability to define expectations in their category

- readiness for rapid adoption when hardware matures

Products that feel natural in emerging interfaces outperform those adapted late. And spatial, real-time interaction is already becoming familiar through voice assistants, hands-free navigation, and multimodal AI.

How The Gradient Helps Enterprise Teams Prepare

At The Gradient, we specialise in designing AI-native experiences — including early explorations into spatial interfaces and real-time decision-support.

We help enterprise teams:

- map real-world behaviour where AI glasses would meaningfully shift user experience

- design multimodal layers that support decisions rather than distract from them

- prototype and test AI glasses scenarios in clinical, retail, and financial contexts

- extend design systems to accommodate spatial and hybrid interfaces

- evaluate technical readiness and ecosystem implications

Our goal is simple: build experiences that feel effortless for users and create meaningful value for the organisations behind them — long before AI glasses reach mainstream adoption.