Voice AI is no longer a “nice-to-have” capability or an experimental add-on. In 2026, it is increasingly becoming a core interaction layer in enterprise products — shaping how people access information, trigger actions, and make decisions inside complex digital systems.

This shift is driven by market behaviour, large-scale enterprise adoption, and measurable product outcomes. From finance and healthcare to operations and internal tools, voice is quietly moving from the edges of interfaces into their foundations.

The key change is not that products are becoming voice-first. It is that they are becoming voice-aware by design.

The Market Signal: Voice Is Becoming Infrastructure

When a technology attracts late-stage funding, enterprise clients, and platform-level integrations at the same time, it usually means it has crossed an important threshold. That is exactly what is happening with voice AI.

In early 2026, Deepgram raised $130 million, reaching a $1.3 billion valuation, to scale real-time speech recognition infrastructure already used by more than a thousand organisations, including large technology platforms and public-sector institutions.

At the application layer, Parloa raised $350 million, tripling its valuation to roughly $3 billion. The company builds AI-powered voice agents for enterprise customer service, used by companies such as Allianz, Swiss Life and Booking.com.

Meanwhile, ElevenLabs, which specialises in high-fidelity speech synthesis, raised $180 million at a $3.3 billion valuation in 2025 and entered 2026 with reported valuation discussions above $10 billion, driven increasingly by enterprise and public-sector demand.

Market forecasts reinforce this signal. Analysts project the voice AI market to grow into the tens of billions of dollars over the next decade, with some forecasts for enterprise-focused segments putting the compound annual growth rate (CAGR) above 34%.

The implication is clear: voice is no longer treated as an interface experiment, but as part of core digital infrastructure.

Voice as a Fast Command Layer for Routine Actions

One of the clearest ways voice becomes a core interface is by acting as a direct command layer for routine, well-defined actions.

Enterprise products are full of interactions that are frequent but low in complexity — checking a status, triggering a process, or retrieving a known data point. Navigating deep UI hierarchies for these tasks creates friction that adds up quickly at scale.

Voice removes that overhead. This is why financial services were among the earliest adopters. At Bank of America, the AI assistant Erica allows customers to complete common actions (checking balances, reviewing spending, paying bills) through spoken or typed requests. Since its launch, Erica has been used by more than 42 million Bank of America clients and has handled over 2.5 billion interactions, with the bank reporting that usage has continued to grow year over year.

By 2025, Bank of America reported that digital interactions across its platforms, including Erica, had surpassed 26 billion annually, underscoring how deeply conversational and digital channels are now embedded in everyday banking workflows.

Crucially, Erica is reported to resolve around 98% of customer queries without human intervention, significantly reducing contact-centre load while maintaining a high-quality user experience.

These are not pilot numbers. They reflect sustained, enterprise-scale adoption of voice as a primary interaction mechanism.

Voice as an Operational Interface in Hands-Busy Contexts

Voice also becomes core when physical context makes screens inefficient or impractical.

In environments such as warehouses, logistics centres or retail floors, stopping to interact with a screen interrupts work. Interaction needs to happen in parallel with physical activity.

Amazon has embedded voice interfaces into internal operational workflows to support exactly this scenario. Voice enables instructions, confirmations and information requests without interrupting physical tasks. In these contexts, voice is not a convenience feature — it is the interface that fits reality.

Voice as a Cognitive Layer in Complex Decision Systems

In knowledge-heavy enterprise products, voice plays a different role.

Healthcare, finance and analytics platforms rarely suffer from a lack of data. They suffer from a lack of clarity. Users are expected to interpret information, understand implications and decide what to do next, often under stress.

Here, voice functions as a cognitive layer, helping users understand what matters now rather than presenting more information.

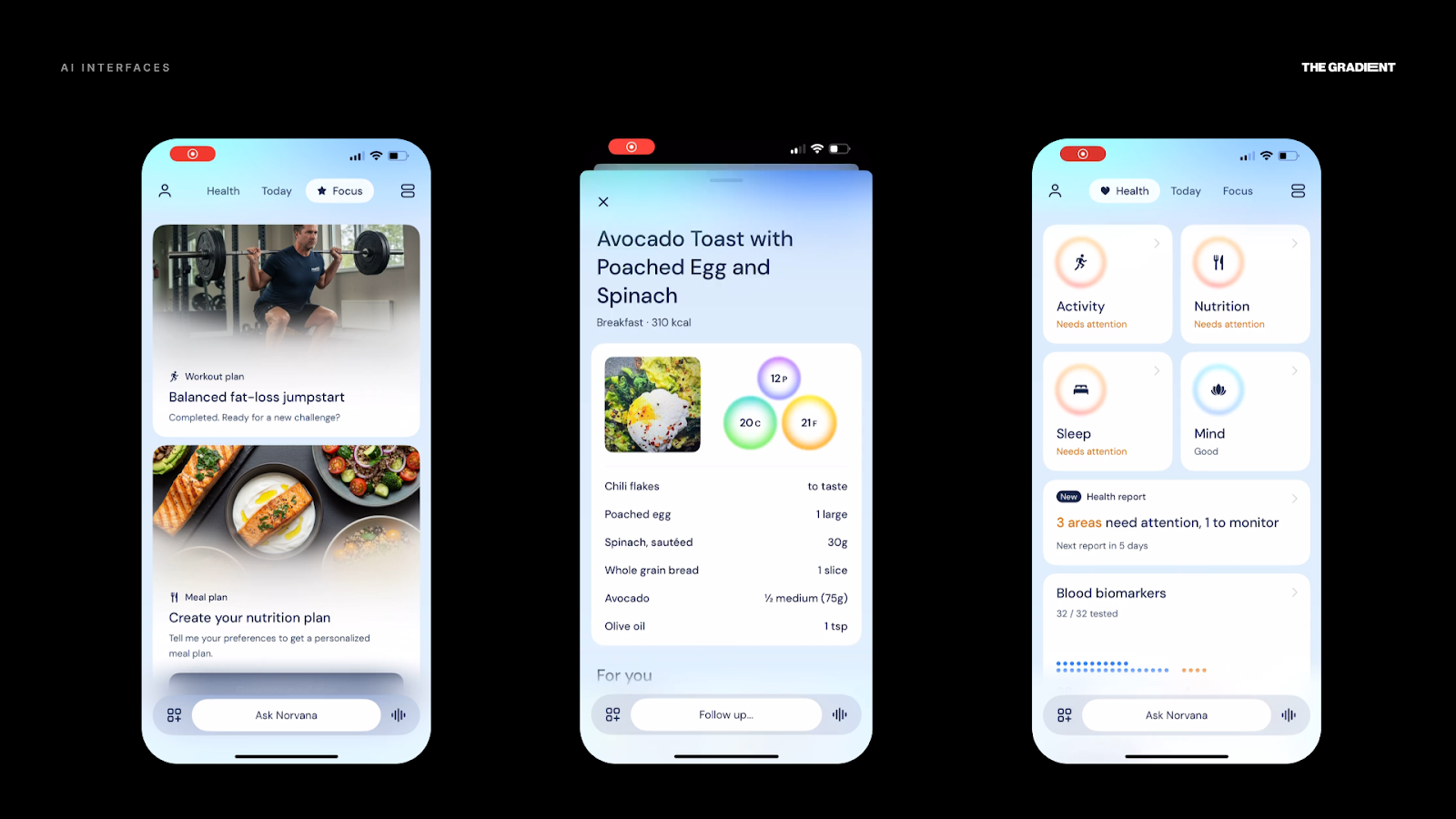

While working on Norvana, an AI-powered personal health companion, we observed this tension clearly. The product relies on charts, metrics and progress indicators, yet users often engage with it when tired, anxious or overwhelmed.

Norvana explored voice not as a replacement for the interface, but as a way to contextualise it. Voice interactions helped users interpret insights, ask questions and receive explanations without navigating complex UI. The visual layer remained essential, but voice reduced the cognitive effort required to use it. In this model, voice does not control the product. It translates it.

Voice as a Productivity Shortcut Inside Existing Tools

For enterprise CRM platforms, voice capabilities are no longer an afterthought. Vendors and third-party developers increasingly embed AI-powered voice assistants directly into the CRM workflow, enabling natural speech interactions that reduce friction and change how users access data and execute tasks.

For example, Outloud, an AI voice assistant for Salesforce, demonstrates how voice can be used not just to retrieve information but to interact with CRM data conversationally. In its demo, users speak naturally to the assistant to update records, fetch insights, and manage tasks directly within the Salesforce interface, all without manual typing or navigation.

This evolution aligns with Salesforce’s broader voice strategy, where Agentforce Voice (Salesforce’s native AI voice agent platform) enables context-aware conversational automation anchored in CRM data, real-time actions, and low-latency responses across channels.

This reinforces a key principle: voice creates value when it supports workflows rather than redefining them.

Where Voice Is Stronger — and Where It Isn’t

Voice is not universally superior to traditional interfaces. It excels when tasks are simple, repetitive or time-sensitive. It lowers cognitive load and improves accessibility, particularly for users with visual or motor constraints.

At the same time, voice performs poorly when users need to compare options, explore data or maintain a strong sense of control. Visual reasoning still dominates these interactions.

This is why voice-first enterprise products often struggle: they try to replace interfaces where augmenting them would be more effective.

Why This Moment Matters for Enterprise Product Leaders

For many teams, voice AI still sits in the “interesting, but later” category. That hesitation is understandable — but increasingly risky.

Infrastructure maturity means latency, accuracy and scalability are no longer the blockers they once were. Enterprise adoption is real. And early movers are already shaping new interaction norms that will soon feel baseline.

Voice will not suddenly replace interfaces. But it will quietly redefine what efficient interaction looks like.

The Gradient: Designing Voice with Restraint

At The Gradient, we approach voice not as a headline capability, but as a UX layer that must earn its place.

We design voice where it reduces cognitive or operational effort — and avoid it where visual reasoning remains essential. In AI-native products like Norvana and in complex enterprise systems alike, voice works best when it supports understanding rather than competes for control. Voice is powerful not when it speaks more, but when it asks less of the user.

FAQ: Voice AI in Enterprise Products

What is voice AI in enterprise products?

Voice AI in enterprise products is a spoken interaction layer embedded into digital systems that allows users to trigger actions, access information, or receive guidance using natural language. Unlike consumer voice assistants, enterprise voice AI is tightly integrated with business logic, internal data, and workflows, and is designed to meet requirements around accuracy, security, and scalability.

How do enterprises actually use voice AI today?

Enterprises use voice AI as a fast command layer for routine actions, as a hands-free interface in operational environments, as a cognitive guide in complex systems such as healthcare or finance, and as a productivity shortcut inside tools like CRMs. In most cases, voice complements existing UI rather than replacing it.

Why is voice AI gaining traction now?

Voice AI adoption has accelerated because key barriers have largely been removed. Speech recognition accuracy and latency now meet production standards, AI models can reliably interpret intent and context, and enterprises can measure ROI through reduced handling time and improved efficiency. Increased late-stage investment also signals long-term viability.

Is voice AI better than traditional user interfaces?

Voice AI is better in specific contexts. It outperforms traditional UI for simple, repetitive or time-sensitive tasks and in situations with high cognitive or physical load. Visual interfaces remain superior for comparison, exploration and analysis.